External Monitor Not Using GPU

The graphics card plays an important role in a computer's image quality. There are two main types on the market: integrated graphics and dedicated (discrete) graphics cards. The former is cheaper and consumes less power, while the latter is preferred by gamers and professional designers.

Some of you might find that your computer is not using the GPU when it is connected to an external monitor. Don't worry. This is fairly common: on many computers with both integrated and dedicated graphics, the external monitor may not use the dedicated GPU by default. In this guide, we will offer three solutions.


How to Fix External Monitor Not Using GPU on Windows 10/11?

Fix 1: Reinstall the Graphics Driver

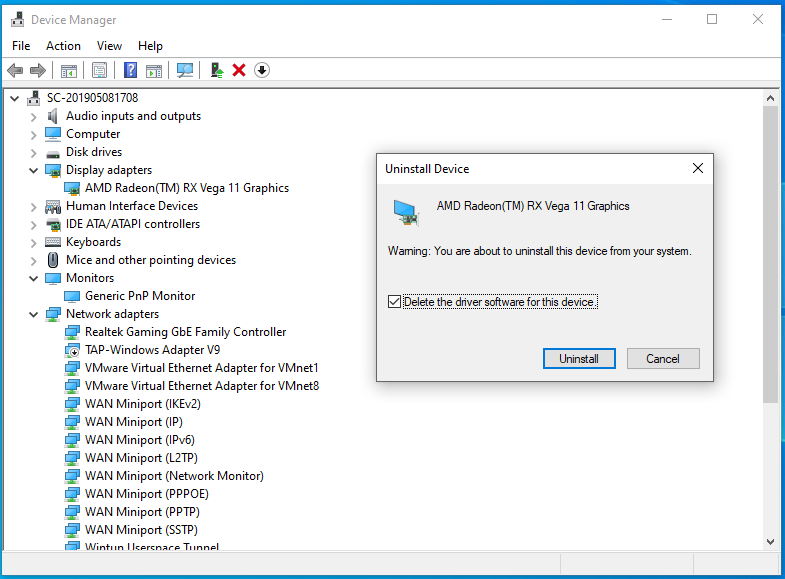

A faulty graphics driver might be the culprit when the external monitor is not using your GPU. If this is the case, reinstalling your graphics driver is a good option. To do so:

Step 1. Right-click on the Start icon and select Device Manager from the quick menu.

Step 2. Expand Display adapters to find your graphics card, then right-click it and select Uninstall device.

Step 3. In the confirmation window, tick Delete the driver software for this device (on newer builds it may read Attempt to remove the driver for this device) and click Uninstall.

Step 4. Restart your computer, and Windows will install a graphics driver automatically. Alternatively, you can download the driver from the manufacturer's website and install it manually.
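If you prefer the command line, the adapters shown under Display adapters can also be listed with Windows' built-in pnputil tool (its /enum-devices option needs Windows 10 version 2004 or later). The Python sketch below is a minimal example, assuming pnputil prints each device as a block of "Key: Value" lines separated by blank lines. The function names are hypothetical, and the field names may differ between Windows versions or display languages.

```python
import platform
import subprocess


def parse_pnputil_devices(text: str) -> list[dict[str, str]]:
    """Split pnputil output into one dict per device block.

    Assumes each device is a run of "Key: Value" lines and that blocks
    are separated by blank lines (an assumption about pnputil's format).
    """
    devices: list[dict[str, str]] = []
    current: dict[str, str] = {}
    for line in text.splitlines():
        if not line.strip():
            # A blank line ends the current device block.
            if current:
                devices.append(current)
                current = {}
            continue
        # Split on the first colon only; values may contain other symbols.
        key, sep, value = line.partition(":")
        if sep:
            current[key.strip()] = value.strip()
    if current:
        devices.append(current)
    # Keep only blocks that actually describe a device.
    return [d for d in devices if "Instance ID" in d]


def list_display_adapters() -> list[dict[str, str]]:
    """Run pnputil on Windows and return the parsed display adapters."""
    if platform.system() != "Windows":
        raise RuntimeError("pnputil is only available on Windows")
    result = subprocess.run(
        ["pnputil", "/enum-devices", "/class", "Display"],
        capture_output=True, text=True, check=True,
    )
    return parse_pnputil_devices(result.stdout)
```

On Windows, looping over `list_display_adapters()` and printing each entry's "Device Description" and "Instance ID" should show both the integrated and the dedicated card, which makes it easier to confirm which one you are about to uninstall.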

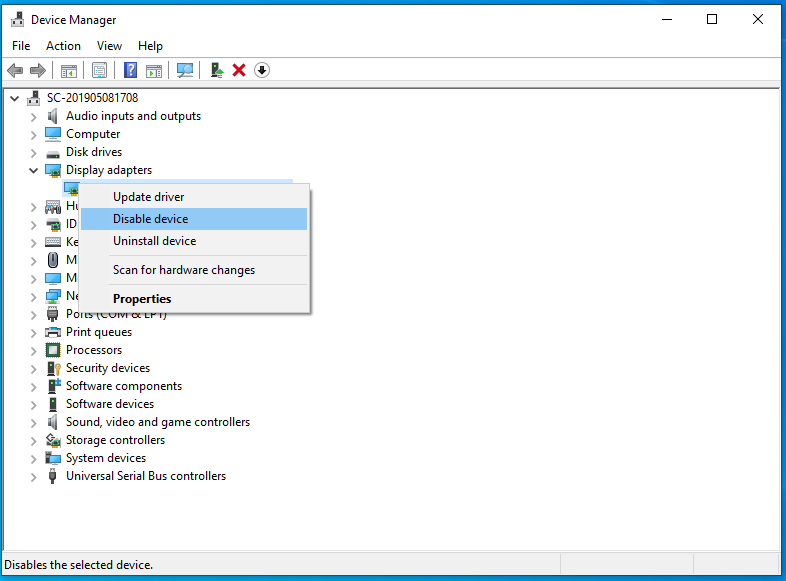

Fix 2: Disable the Integrated Graphics Card

To fix the external monitor not using your GPU, you can try disabling the integrated graphics card on the PC. That way, the dedicated card is the only graphics card Windows detects. To do so:

Step 1. Press Win + R to open the Run box.

Step 2. Type devmgmt.msc and hit Enter to launch Device Manager.

Step 3. Expand Display adapters, right-click the integrated graphics card, and select Disable device.

Step 4. Click Yes to confirm. Then check whether the external monitor now uses the dedicated graphics card.
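The same change can be scripted with pnputil, which offers /disable-device and /enable-device options on Windows 10 version 2004 and later and likely needs an elevated (administrator) prompt. The sketch below is a minimal, hypothetical helper: it only builds and, if asked, runs the command for an instance ID you supply (for example, one copied from Device Manager's Details tab). The check that the ID starts with "PCI\" is an extra safety assumption of this sketch, not a pnputil rule.

```python
import platform
import subprocess


def build_pnputil_command(action: str, instance_id: str) -> list[str]:
    """Build a pnputil command to disable or re-enable one device."""
    if action not in ("disable", "enable"):
        raise ValueError(f"unsupported action: {action!r}")
    # Safety guard (an assumption of this sketch): graphics cards are PCI
    # devices, so refuse IDs that do not look like PCI instance IDs.
    if not instance_id.upper().startswith("PCI\\"):
        raise ValueError(f"not a PCI instance ID: {instance_id!r}")
    return ["pnputil", f"/{action}-device", instance_id]


def run_pnputil(action: str, instance_id: str, dry_run: bool = True) -> list[str]:
    """Print the command (dry run) or execute it on Windows."""
    cmd = build_pnputil_command(action, instance_id)
    if dry_run:
        print("Would run:", " ".join(cmd))
        return cmd
    if platform.system() != "Windows":
        raise RuntimeError("pnputil is only available on Windows")
    subprocess.run(cmd, check=True)
    return cmd
```

Keeping `dry_run=True` as the default lets you review the exact command before anything changes; if the screen or external monitor misbehaves afterwards, the same helper with `"enable"` builds the command that turns the integrated card back on.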

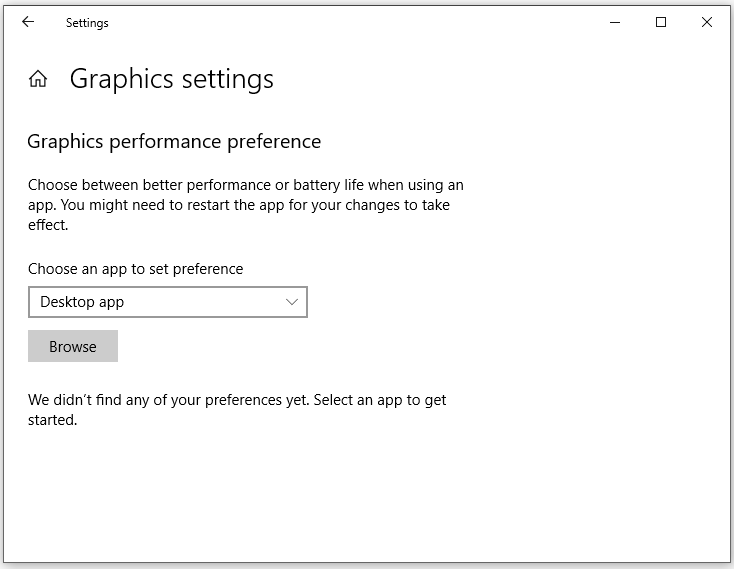

Fix 3: Set a Preferred GPU

Usually, when you start a demanding application such as a video game, your laptop should switch to the dedicated GPU automatically. If your computer keeps using the integrated card when running a heavy application, follow these steps to set your dedicated graphics card as the preferred one for that application:

Step 1. Type graphics settings in the search bar and hit Enter.

Step 2. If the application is not already in the list, choose Desktop app, click Browse, and locate the application's executable (.exe) file.

Step 3. Select the application, click Options, choose your preferred GPU (High performance for the dedicated card), and save the changes.

Step 4. Relaunch the application and check whether it now runs on the dedicated graphics card.
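As far as we know, Windows 10/11 saves this per-app choice in the registry under HKEY_CURRENT_USER\Software\Microsoft\DirectX\UserGpuPreferences, with the full .exe path as the value name and a string such as GpuPreference=2; as the data (0 = let Windows decide, 1 = power saving, 2 = high performance). The Python sketch below writes that value with the standard winreg module; the helper names are hypothetical, and it is worth confirming the result on the Graphics settings page afterwards.

```python
import sys

# Registry key assumed to back the per-app Graphics settings page.
GPU_PREF_KEY = r"Software\Microsoft\DirectX\UserGpuPreferences"

# 0 = Let Windows decide, 1 = Power saving, 2 = High performance.
PREFERENCES = {"default": 0, "power_saving": 1, "high_performance": 2}


def gpu_preference_data(preference: str) -> str:
    """Return the registry string for a preference name."""
    if preference not in PREFERENCES:
        raise ValueError(f"unknown preference: {preference!r}")
    return f"GpuPreference={PREFERENCES[preference]};"


def set_gpu_preference(exe_path: str, preference: str = "high_performance") -> None:
    """Write the GPU preference for one executable under HKEY_CURRENT_USER."""
    if sys.platform != "win32":
        raise RuntimeError("this registry setting only exists on Windows")
    import winreg  # standard library, but only available on Windows

    data = gpu_preference_data(preference)
    with winreg.CreateKeyEx(winreg.HKEY_CURRENT_USER, GPU_PREF_KEY, 0,
                            winreg.KEY_SET_VALUE) as key:
        # The value name is the full path to the .exe; the type is REG_SZ.
        winreg.SetValueEx(key, exe_path, 0, winreg.REG_SZ, data)
```

Because the key lives under HKEY_CURRENT_USER, the change applies only to the current user account, and, as with the settings page, the application has to be relaunched to pick it up.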

For NVIDIA graphics cards:

Step 1. Open NVIDIA Control Panel and select Manage 3D settings.

Step 2. Under the Program Settings tab, select the application that you want to run on your dedicated GPU.

Step 3. Set Preferred graphics processor to High-performance NVIDIA processor.

Final Words

That's all for the solutions to an external display not using the GPU. We sincerely hope you can enjoy a seamless and smooth image processing experience!